BEING VIGILANT IN THE ERA OF DEEPFAKES

By Poojitha Nakul

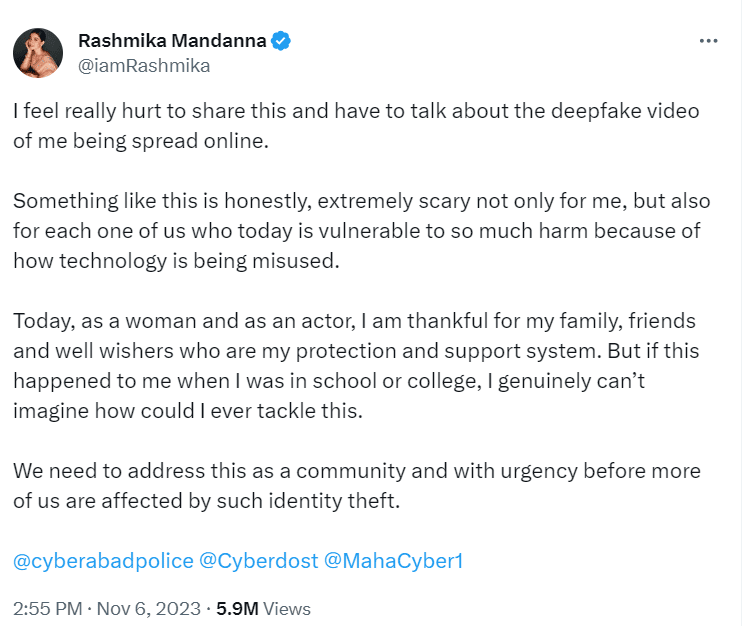

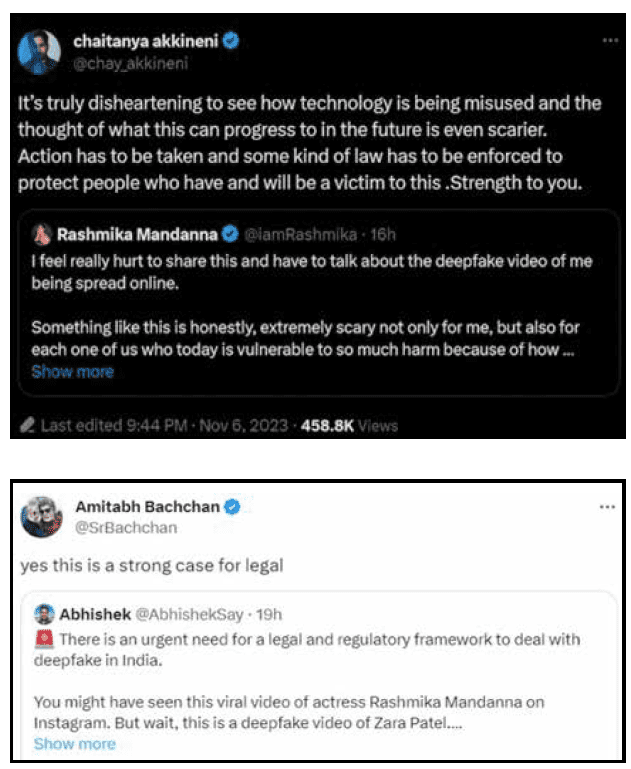

A simple video of a woman entering a lift has made waves on social media, prompting ministers and actors to voice their concern about the abuse of technology. The edited video, which appears to show the famous South Indian actor Rashmika Mandanna, is a deepfake generated by artificial intelligence (AI) from a base video of a social media influencer. Deepfake technology allows users to convincingly replace one person’s likeness with another’s using AI and deep learning algorithms, creating realistic-looking videos, audio recordings, or images that make it seem as though a person said or did something they never did. The Rashmika Mandanna lookalike video showcased the darker side of AI and the worrying power of social media to spread such content like wildfire.

Here are a few ways to identify an AI-generated deepfake video:

- Visual Inspection:

  - Look for inconsistencies in facial features, expressions, and movements. Deepfakes may exhibit unnatural facial distortions or strange behaviours.

  - Pay attention to lighting and shadows. Deepfakes might not accurately reflect the lighting conditions of the environment.

- Audio Analysis:

  - Listen to the voice of the person in the video. Deepfake audio may sound synthetic or have unusual fluctuations.

  - Check whether the audio matches the lip movements and expressions of the person.

- Review Context:

  - Analyze the context in which the video is presented. Does it align with what you know about the person or the situation?

  - Be cautious of sensational or extraordinary claims in the video.

- Check for Artifacts:

  - Look for visual artifacts, blurriness, or unusual distortions around the face or other objects in the video.

- Use Deepfake Detection Tools:

  - Various online tools and software can help identify deepfakes. Tools such as Microsoft’s Video Authenticator and other deepfake detection apps have been developed for this purpose.

- Reverse Image and Audio Search:

  - Try reverse image and audio searches to see whether the same content has appeared elsewhere on the internet, which can point to the original footage and reveal manipulation.

- Metadata Analysis:

  - Examine the metadata of the video file for any inconsistencies or anomalies. This can sometimes reveal signs of manipulation.

- Expert Opinion:

  - Seek the opinion of experts in fields like computer vision, forensics, or media analysis if you have doubts about the authenticity of a video.

- Source Verification:

  - Verify the source of the video. Is it from a reputable and trustworthy channel or source? Be cautious of videos from unknown or suspicious sources.

- Stay Informed:

  - Keep up to date with the latest developments in deepfake technology and detection methods. The technology is continually evolving.
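For readers comfortable with a little code, the metadata check in the list above can be sketched in Python. This is only an illustration under stated assumptions: it expects the metadata to have already been extracted into a dictionary shaped like the JSON report of a tool such as ffprobe, the `creation_time` and `encoder` tags are not guaranteed to be present in every file, and the function name and keyword list are hypothetical. The warnings it produces are prompts for a closer look, not proof of manipulation, since ordinary re-uploads also rewrite metadata.

```python
from datetime import date, datetime, timezone

# Hypothetical keyword list: encoder strings hinting that a file was re-exported.
SUSPICIOUS_ENCODER_HINTS = ("lavf", "premiere", "after effects", "davinci")


def parse_time(value):
    """Parse an ISO 8601 timestamp such as '2023-11-05T10:15:00.000000Z'."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # Assumption: timestamps without a zone are treated as UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def find_metadata_anomalies(meta, claimed_date=None, now=None):
    """Return human-readable warnings for one video's metadata dictionary."""
    warnings = []
    tags = meta.get("format", {}).get("tags", {})
    now = now or datetime.now(timezone.utc)

    created_raw = tags.get("creation_time")
    if created_raw is None:
        warnings.append("no creation_time tag (often stripped when a file is re-encoded)")
    else:
        created = parse_time(created_raw)
        if created > now:
            warnings.append("creation_time is in the future")
        if claimed_date is not None and created.date() > claimed_date:
            warnings.append("file was created after the date the footage is claimed to show")

    encoder = tags.get("encoder", "").lower()
    for hint in SUSPICIOUS_ENCODER_HINTS:
        if hint in encoder:
            warnings.append(f"encoder tag mentions '{hint}', so the file was likely re-exported")
    return warnings


# Made-up sample metadata, for illustration only.
sample = {
    "format": {
        "tags": {
            "creation_time": "2023-11-05T10:15:00.000000Z",
            "encoder": "Lavf58.29.100",
        }
    }
}

for warning in find_metadata_anomalies(sample, claimed_date=date(2023, 10, 9)):
    print("Warning:", warning)
```

A clean result from a check like this does not mean a video is genuine. It is one signal to weigh alongside the visual, audio, and source checks listed above.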

Where a deepfake involves capturing, publishing, or transmitting a person’s images in a way that violates their privacy, Section 66E of the IT Act, 2000 applies. This offense is punishable with imprisonment of up to three years or a fine of up to Rs. 2 lakh. Union Minister Rajeev Chandrasekhar clarified that “Under the IT rules notified in April 2023 – it is a legal obligation for platforms to: ensure no misinformation is posted by any user AND, ensure that when reported by any user or govt, misinformation is removed in 36 hrs. If platforms do not comply with this, rule 7 will apply and platforms can be taken to court by an aggrieved person under provisions of IPC.”

Finally, as a user of technology, it’s important to remember that while these methods can help, there is no foolproof way to detect deepfakes, because the technology is constantly improving. Critical thinking, scepticism, and a cautious approach to online media are essential in dealing with the challenges posed by deepfakes.
